I started my undergraduate studies in economics in the late 1970s after starting out as…

Whatever ... it's a macroeconomic problem

In the Financial Times this morning there was a thought-provoking article by Mort Zuckerman entitled The free market is not up to the job of creating work, which stands in stark contrast to another article, Goodbye, Macroeconomics, written by Eli Noam, which appeared in the FT last week. The former seems to understand the depth of the problem and has the right priorities but doesn’t come up with the right policies. The latter raises some interesting points but misunderstands the nature of macroeconomics.

Zuckerman says that the:

“US lost 44m jobs in the last two decades of the 20th century, but simultaneously created 73m private sector jobs. A stunning 55 per cent of the total workforce was in new jobs by the turn of the century, two-thirds of them in industries that paid more than the average wage. This is no fluke. It is because we benefit from a unique brand of entrepreneurial bottom-up capitalism.”

He notes that the US labour market has now shed millions of jobs and that, since that period of strong job creation, several disturbing trends have emerged. Americans have gone from being savers to a “nation of debtors, staggering beneath mortgages that exceed the value of our homes, and credit lines that exceed … [their] … ability to repay”. The two trends – shedding jobs and rising indebtedness – are related, as modern monetary theory (MMT) allows us to appreciate. More on this later.

Zuckerman despairs at the rise of the European and Australian disease – long-term unemployment – which until now the US has not been particularly burdened with. Of the 15 million unemployed in the US, a record share have now been unemployed for longer than 6 months – the “highest proportion since records began in 1948” – and hours are being cut dramatically for those who retain work – “to the lowest in 60 years”. Wage growth is non-existent and labour force participation is also falling.

So as Arthur Okun observed long ago – unemployment is the tip of the iceberg. You might want to read this blog – If we don’t, it won’t and won’t need to … – where I discuss this idea and its consequences.

The problem with the US income support system is that it assumes unemployment is only a short-term phenomenon – so benefits are partial relative to wages earned in employment (this is not abnormal) but they also run out after a relatively short period. Further, a person normally has to have worked full-time in the last job for more than 12 months to qualify. Zuckerman says (and I haven’t verified this – Bruce, is this right?) that “only 43 per cent are eligible for unemployment benefits” because the rest have been in part-time or ephemeral jobs or were not counted as wage earners (that is, they were independent contractors).

For the so-called richest nation in the World, “food stamps now feed a near-record one in nine Americans”. I was reminded of the film The Soloist, especially the scenes around the LAMP collective which were truly horrific. I also thought of the changes I have seen over the last 20 years in my regular visits to my favourite (though this is now questionable) city – San Francisco. There I now see homeless families (spanning 2-3 generations), which is tragic.

Zuckerman says:

This is the only recession since the Great Depression to wipe out all job growth from the previous business cycle. The broader measure of unemployment, the “household index” encompassing people who are unemployed and underemployed, has reached a record 17 per cent. The household survey revealed staggering job losses of 785,000 for September. It includes about 571,000 people who dropped out of the workforce last month, presumably because they despaired of finding work.

The probability of finding a job has plunged in the US (see next section) and long-term unemployment entrenches that situation. This is one of the real problems of allowing an economy to lose so many jobs – a cohort gets locked into unemployment even when the economy starts producing work in reasonable proportions. The new entrants always appear more attractive to employers, so the long-term unemployed miss the growth boat and the disadvantage becomes intergenerational as their kids also get set up for long-term labour market problems.

We have clearly seen that in Australia since the 1991 recession. At the upcoming CofFEE conference, which I host in December each year, I will be presenting a paper on that theme. As an aside, this is a great annual gathering of modern monetary theorists from all over the World and you are all welcome to come along and join in the debates.

I could go on detailing the pathology in the US labour market – unemployment-vacancy ratio at 6 (up from 1.7 at the top of the cycle); around a quarter of teenagers without work; rising prime-age male unemployment; women’s share of the employed labour force rising dramatically but pushing average wages down as a consequence; many traditional jobs in manufacturing and finance are gone forever etc. Very bleak.

The implied dynamics for the US are also bleak. The jobs that are being created are typically in low-skill personal care services where the prospects for innovation (and hence productivity and real wages growth) are restricted.

The damage from failing to address this problem head-on (that is, failing to create jobs) will be staggering. Newspaper columns are filling up every day with stories about the fall of the “mighty dollar”, which is not really an issue at all: if people want to reduce their holdings of USD they have to spend them on US-priced goods and services, which will boost aggregate demand. The dollars don’t just disappear, which is what a lot of commentators leave the innocent reader wondering. Yet there is not much written about how bad the next decade in the US will be as the labour market mess is mopped up.

I think the bias to financial matters reflects the destructive legacy of the neo-liberal years – we fail to prioritise real problems and have anxiety attacks about the phony issues.

So what does Zuckerman want done? Well he says:

Only massive programmes are equal to the challenge of restoring stable growth to our economy. One such programme would be to establish a National Infrastructure Bank … to which the government would assign the $65bn (£40bn, €45bn) annually allocated to support infrastructure construction nationally. The bank would have the capacity to borrow, with federal guarantees, an additional $200bn. This programme would ensure a rational rather than a political investment in infrastructure, and provide long-term infrastructure development on a major scale with a maximum multiplier effect on the economy. A second programme would be a 100 per cent tax credit for increases in research and development by American businesses … There is no time to lose.

I agree with the idea of national infrastructure banks which can oversee large public infrastructure projects. One of the neo-liberal inventions has been the public-private partnership (PPP) approach to large-scale infrastructure development, which has been fostered by governments under the false assumption that they are revenue-constrained (national level) or should never borrow (state level). This is a disease in Australia. The problem is that the whole pattern of national infrastructure development has changed as private equity interests drive the planning and implementation processes to suit their own interests rather than to advance public purpose. There are countless examples of this conflict of interest in the UK and Australia alone over the last 15 or so years.

The problem with both elements of Zuckerman’s plan is that, as he says himself, there is no time to lose. While the initiatives he proposes should perhaps be considered (although I don’t support tax credits to private enterprise), they will not produce enough jobs quickly enough to arrest the atrophy that is already mounting.

Further, the sort of ventures he desires – which are intrinsically desirable, no question – tend not to employ low-skilled workers who have been unemployed for long periods of time and who may have other dimensions of disadvantage as a consequence (mental health problems, etc). They need accessible jobs to be made available.

In my view the US government has to start getting tough with the top-end-of-town (no more handouts) and, instead, start creating, on a national scale, large numbers of jobs that will be suitable for low-skill workers. I would pay them the minimum wage but, in saying that, I would also raise the minimum wage to a level that allowed individuals to live in decent accommodation and participate fully in the community. The current US minimum is not sufficient to allow this.

The Obama administration is overseeing a disaster at present and while it might say it was not of its own making – the reality is that the solution is all of its making. And at present it has failed dramatically to go about solving the problem in the most effective way. But what would you expect when the President is getting swamped by Larry Summers and other mates from Wall Street?

Brief digression – What are the transition probabilities in the US?

Zuckerman says that it is now harder to get a job in the US. I decided to investigate that – I was already working on some gross flows analysis anyway for the US as part of another project.

Gross flows analysis provides a useful alternative way to interpret the state of the labour market. It allows us to trace flows of workers between different labour market states over some period. In the case of the US, the data are published for three working-age population states (employment, unemployment and non-participation), whereas Australia breaks employment down further into full-time and part-time.

The data can be used in a Markovian sense (the chance of moving to another state next month depends only on a worker's current state, not on their history), which, while placing some restrictions on how we conceptualise the evolution of the data, does provide a basis for computing the so-called transition probabilities. In this context, transitions are changes of state (for example, a shift from unemployment to employment) and the probabilities computed for each of these changes are called transition probabilities. So if the transition probability from employment to unemployment is 0.05, we say that a worker who is currently employed has a 5 per cent chance of becoming unemployed in the next month. If this probability fell to 0.01 then we would say that the labour market is improving (only a 1 per cent chance of making this transition).

You might like to read this blog – The labour market is turning … down! and this one that followed it – What can the gross flows tell us? – for further information about how this sort of analysis is undertaken and some of the problems with it.

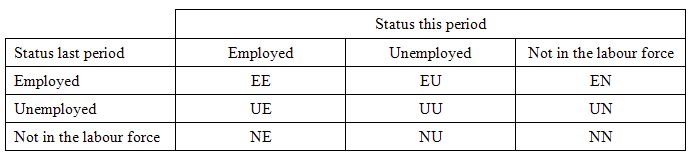

The table above shows the schematic way in which gross flows data are arranged each month – the so-called Gross Flows Matrix. Here E is employment, U is unemployment and N is not in the labour force. The Gross Flows Matrix can be interpreted straightforwardly. For example, the element EE tells you how many people who were employed in the previous month were still employed in the current month. They might not be in the same jobs but they are still employed. Similarly, the element EU tells you how many people who were employed in the previous month are unemployed in the current month. And so on. This allows you to trace all inflows to and outflows from a given state during the month in question.

The transition probabilities are then computed by dividing each flow element in the matrix by the relevant initial stock. For example, if you want the probability of a worker remaining unemployed between the two months you would divide the flow UU by the initial stock of unemployment, U(t-1). If you wanted to compute the probability that a worker would make the transition from employment to unemployment you would divide the flow EU by the initial stock of employment, E(t-1). And so on. So for the 3 states we can compute 9 transition probabilities reflecting the inflows and outflows for each of the combinations.
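The division described above can be sketched in a few lines of Python. This is a minimal illustration, not the code behind the graphs in this post: the flow figures are invented (they are not BLS data), the nested-dictionary layout is an assumption of mine, and it assumes the small "Other" flows have already been dropped so that each row of the matrix sums to the previous month's stock of that state.

```python
# A hypothetical gross flows matrix (thousands of persons) between month t-1 and t.
# Rows are the state at t-1, columns the state at t:
# E = employed, U = unemployed, N = not in the labour force.
# The numbers are invented for illustration; they are NOT BLS data.
STATES = ("E", "U", "N")

FLOWS = {
    "E": {"E": 130_000, "U": 2_000, "N": 4_000},
    "U": {"E": 2_500, "U": 9_000, "N": 1_500},
    "N": {"E": 4_500, "U": 2_000, "N": 76_000},
}


def transition_probabilities(flows):
    """Divide each flow by the stock of its origin state at t-1.

    Assumes the "Other" flows have been removed, so each row sums to the
    initial stock, e.g. E(t-1) = EE + EU + EN.
    """
    probs = {}
    for origin in STATES:
        stock = sum(flows[origin][dest] for dest in STATES)
        probs[origin] = {dest: flows[origin][dest] / stock for dest in STATES}
    return probs


p = transition_probabilities(FLOWS)
for origin in STATES:
    for dest in STATES:
        print(f"{origin}{dest}: {p[origin][dest]:.3f}")
```

Each row of probabilities sums to one, because everyone who was in a given state at t-1 must be in one of the three states at t.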

These flow probabilities are called ‘transition’ rates and they determine the relative number of persons in each labour market state. Given that the unemployment rate is the number of unemployed workers expressed as a fraction of the labour force, flow probabilities also determine the unemployment rate.
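The link between transition probabilities and the unemployment rate can be made concrete. Under the Markov assumption, if a given set of monthly probabilities persisted indefinitely, the shares of the population in E, U and N would converge to a steady state, and the unemployment rate implied by the flows is U / (E + U) at that steady state. The sketch below finds it by repeatedly applying one month of transitions. The probabilities are hypothetical round numbers, not the estimates plotted in this post, and the function names are illustrative only.

```python
# Hypothetical monthly transition probabilities; each row sums to 1.
# Illustrative values only, not estimates from the BLS gross flows.
STATES = ("E", "U", "N")

P = {
    "E": {"E": 0.96, "U": 0.01, "N": 0.03},
    "U": {"E": 0.25, "U": 0.60, "N": 0.15},
    "N": {"E": 0.05, "U": 0.02, "N": 0.93},
}


def step(shares, probs):
    """Apply one month of transitions to the population shares."""
    return {
        dest: sum(shares[orig] * probs[orig][dest] for orig in STATES)
        for dest in STATES
    }


def steady_state(probs, tol=1e-12, max_iter=100_000):
    """Iterate the chain from equal shares until the shares stop changing."""
    shares = {s: 1.0 / len(STATES) for s in STATES}
    for _ in range(max_iter):
        new = step(shares, probs)
        if max(abs(new[s] - shares[s]) for s in STATES) < tol:
            return new
        shares = new
    raise RuntimeError("shares did not converge")


def implied_unemployment_rate(probs):
    """Unemployed as a fraction of the labour force (E + U) at the steady state."""
    ss = steady_state(probs)
    return ss["U"] / (ss["E"] + ss["U"])


print(f"Implied steady-state unemployment rate: {implied_unemployment_rate(P):.1%}")
```

Lowering UE and raising UU, which is the direction of the US movements between December 2006 and September 2009, pushes the implied rate up. Solving the linear system directly would give the same answer; iteration is used here only because it mirrors the month-by-month reading of the flows.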

I used monthly data from the US Bureau of Labor Statistics – specifically, two text files are apposite: the data file and the explanatory notes, which allow you to determine what the columns in the raw data file are. I used the seasonally adjusted monthly data and adjusted the populations for 3 flows that the BLS provides which emanate from an “Other” category. They are small and reflect coding errors in the survey instrument, so I excluded them.

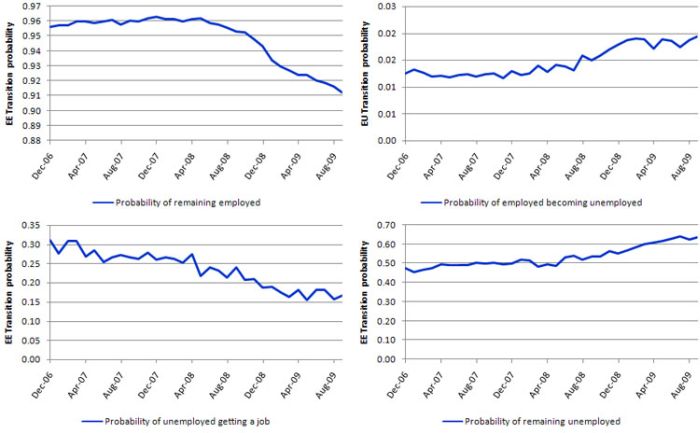

The unemployment rate reached its low point in the last US cycle in December 2006 and the labour market went sour after that. I computed the various transition probabilities from that date to September 2009 and I report them in the graph above. The top row captures the EE transition probabilities (that is, retaining employment) and the EU probabilities (losing a job and becoming unemployed). The bottom row captures the UU transition probabilities (that is, remaining unemployed) and the UE probabilities (getting a job if you are unemployed).

The decline in the US labour market is evident. In December 2006, the EE probability was 96 per cent (very high) and it has now fallen to 91 per cent. This is a very sharp plunge. Similarly, the EU probability has gone from 1.2 per cent to 2 per cent. The UU probability has gone from 47.3 per cent to 63.8 per cent (a sharp rise) and the UE probability has fallen from 31 per cent to 17 per cent. If I compared these to what is happening in Australia at present you would be shocked. While our transitions reveal that our situation has worsened, they stand in stark contrast to the US case. The dynamics revealed by the US gross flows are as bad as I have seen.

Goodbye, Macroeconomics

I meant to write about this last week but I had already written enough and was quite busy getting all the reports and documentation ready for the Central Asia mission. But it is worth reflecting on because it marks a new trench that is opening up in the battle against fiscal policy.

The writer, one Eli Noam, starts off by saying:

We are in the midst of a severe economic crisis, the second in about a decade, and the third for Latin America and Asia. It appears that information based economies are volatile. This is partly due to the fundamental price deflation in some of the core information services and products, and partly due to the much greater speed of transactions that outpace the ability of traditional institutions to cope. Information technology contributes to the volatility. But can the same technology also provide new tools for stabilisation?

I agree that the rise of information technology (especially software) has changed things dramatically in ways that we still do not fully understand. The marginal costs of producing these goods are zero and that has significant implications (not covered in this blog) for the conduct of product markets. Further, open source movements are making it hard for a commercial model to dominate the Internet. The capitalist class has been trying to take over the Internet since they realised (rather late) what was going on. So far, they have only penetrated marginally. MSN’s attempt to make the protocols proprietary failed. I predict attempts to charge for news content will be undermined. Viva!

But Noam ties this into what he sees as a failure of macroeconomics. He says:

… when the present economic crisis hit, governments dealt with it in a traditional way through broad-based stimulus spending and through interest rates. But it is unclear whether the remedies of the industrial age apply. Demand is not the main problem of the information economy. People consume more bits and minutes than ever. The problem is prices, together with the inability to monetise many information activities. This leads to early over-expansions to gain market share, and subsequent contractions.

This is an interesting point. But it hardly addresses where the major job losses are coming from and why. The problem, as I noted above, is one of being able to commercialise the network. The nature of the network and the characters who are gaining the most skills are both resistant to commercialisation as a matter of ideology (or preference). There is an inherent difficulty for any company that tries to make money on the Internet. But this is not the main game at present.

So what is Noam on about? Well he says:

Nor is the pace of these macro-responses adequate for the accelerating speed of the information economy. By the time the emergency moneys have been actually spent, we are likely to be out of the recession and they might stimulate inflation.

Hmm, how does that work? Inflation is a problem if spending growth outstrips the real capacity of the economy to match it. As long as the emergency (note the bias – deficits are emergencies) spending is targeted at areas where slack real resources are likely to be drawn back into productive activity then there will be no obvious inflation problem in the foreseeable future.

If we reflect back on Zuckerman’s angle that the recession has killed whole productive sectors – like car manufacturing – then the challenge to ensure there are real responses is not trivial. If the recession has really destroyed productive capacity to such an extent that it has gone forever then the stimulus must aim to develop new productive capacity (hence Zuckerman’s recommendations). But I doubt that the scale of productive infrastructure destruction is as great or as inelastic as Zuckerman suggests.

At any rate, the vast proportion of those carrying the jobless burden are not high-skilled workers and so the scope for bringing them back into production is almost unlimited (within the scale of the cohort being considered). Perhaps, the US Government has to think laterally and re-define what they think is a productive activity. The concept of productive employment is very skewed towards only considering activities that underpin private profit to be worthwhile. Witness the responses whenever public sector job creation is suggested.

But all of this has nothing much to do with the speed of information flows despite what Noam would like us to believe. I suspect his argument is a new slant on the “deficits are inflationary” angle.

He continues:

The new type of problem, in contrast, is the enormous flow of computer-based economic activity that is increasingly impenetrable to interpret or respond to. Yet proponents of the traditional tools mostly got upset when the new elements of the economy undermined their traditional tools. As e-money emerged, symposia were full of professors of macroeconomics and central bankers lamenting the difficulty of controlling this new supply of money. In other words, the efficiency of the advanced economy had to serve the efficiency of monetary policy, not the other way around.

Yes, all the losers in my profession who think the government has a budget constraint and has to raise taxes or sell bonds before it can spend, and has to raise taxes to wipe out deficits! They are the ones who considered the Internet to be the death-knell for fiscal policy. But then again they had already decided that fiscal policy was dangerously undesirable.

The “supply of money” new or otherwise is not something that a modern monetary government should focus on. That obsession is one of the residuals of the failed Monetarist era which constructs the monetary system erroneously as: government controls money supply -> money supply growth causes inflation -> uncontrollable money supply growth causes worse inflation. If I had a mainstream textbook here with me I could quote extensive tracts from it to this end.

What the government has to manage is growth in aggregate demand relative to the real supply capacity of the economy. It cannot control the money supply and doesn’t try to. A good sign that demand is getting too strong is that labour underutilisation is disappearing. The US economy is not remotely close to that state and will not be for years.

Further, fiscal policy has all the requisite tools to slow down a high-pressure economy should that arise, just as it can always expand a flagging economy. One should not equate the strength of fiscal policy with the practice of fiscal policy in the hands of dunces who don’t believe in it in the first place. A lot of what has gone on in the US under the guise of the stimulus package has brought undeserved discredit on the capacity of fiscal policy (designed and implemented appropriately) to advance public purpose.

The fact that the US government (like most) does not see the advantages of putting a minimum wage cum full employment floor into the economy via a Job Guarantee, as the first step in boosting the effectiveness of the automatic stabilisers, is testimony to its failure to understand how a modern monetary system operates.

Noam then gets onto aggregates:

The most important aspect is the ability of the new technology to differentiate and customize. On the internet, each packet is identified as to sender and receiver. Which means that one can identify users, and uses. And if we can identify, we can differentiate. This is very powerful. Traditional macroeconomics was very aggregate. It was their essence. The reasons were two: for theorists, it was easier to write equations that way. And for policy implementation, it was difficult, in very practical administrative terms, to disaggregate the many economic agents in a society.

What? Macroeconomics by definition is aggregate analysis. The reasons had nothing to do with equations and nothing to do with “ease” of policy administration.

Macroeconomics emerged out of the failure of mainstream economics to conceptualise economy-wide problems – in particular, the problem of mass unemployment. Marx had already worked this out and you might like to read Theories of Surplus Value, where he discusses the problem of realisation when there is unemployment. In my view (and I might write a separate blog on this) Marx was the first to really understand the notion of effective demand – in his distinction between a notional demand for a good (a desire) and an effective demand (one that is backed with cash). This distinction, of course, was the basis of Keynes’ work and of later debates in the 1960s, when Robert Clower and Axel Leijonhufvud demolished mainstream attempts to undermine the contribution of Keynes by advancing a sophisticated monetary understanding of the General Theory.

The point is that prior to this the mainstream thought you could theorise at the aggregate level by adding up the individual demand and supply functions. They soon hit aggregation absurdities so fudged it by defining representative agents (one firm that was the average of all and one household etc). Total nonsense but it persists today.

However, even that didn’t save them. They failed to understand that what might happen at an individual level will not happen if all individuals do the same thing. This is the insight of the paradox of thrift – one person can increase their saving ratio and their saving, but if we all do it, then aggregate demand falls and income adjustments thwart our attempts.
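The paradox of thrift can be illustrated with the simplest textbook income-expenditure model. This is a stylised sketch whose assumptions are mine, not the post's: a closed economy with no government, consumption equal to (1 - s) times income, and investment fixed at 100 regardless of income. Equilibrium income is then I / s, so aggregate saving, s times I / s, always equals investment, whatever saving rate households choose.

```python
def equilibrium(investment, saving_rate):
    """Closed economy, no government: Y = C + I with C = (1 - s) * Y.

    Solving Y = (1 - s) * Y + I gives Y = I / s, so aggregate saving
    S = s * Y equals I whatever saving rate s households choose.
    Investment is assumed fixed (it does not respond to income).
    """
    income = investment / saving_rate
    saving = saving_rate * income
    return income, saving


for s in (0.10, 0.20):
    income, saving = equilibrium(investment=100.0, saving_rate=s)
    print(f"s = {s:.2f}: income = {income:.1f}, saving = {saving:.1f}")
# s = 0.10: income = 1000.0, saving = 100.0
# s = 0.20: income = 500.0, saving = 100.0
```

Doubling the saving rate halves income and leaves total saving unchanged: the collective attempt to save more is thwarted by the fall in income. If investment also fell as income fell, total saving would actually decline.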

Similarly, one firm might be able to cut costs by lowering wages for their workforce and because their demand will not be affected they might increase their hiring. But if all firms did the same thing, total spending would fall dramatically and employment would also drop. Again, trying to reason the system-wide level on the basis of individual experience generally fails.

The technical issue comes down to the flawed assumption that aggregate supply and aggregate demand relationships are independent. This is a standard assumption of mainstream economics and it is clearly false.

The general problem is referred to as the fallacy of composition and it is what makes macroeconomics special. Noam doesn’t seem to understand any of that.

Noam then says that:

But now, we have tools that can differentiate. With proper legal authorization, a central bank could charge different overnight rates to different banks or vary reserve requirements. Sales and other taxes could be varied selectively for different products, regions, or users. Tax credits could be tied to spending for particular uses. Stimulus money could go towards spending or investments that are above the level of last year. To give a close analogy: In the past, toll roads could charge motorists only in a very undifferentiated way. But now, with automated billing and stored payment systems, we can charge different prices by time of day, by frequency of use, by the characteristics of the driver, by the characteristics of the car, and by the proximity of a driver’s residence to public transportation alternatives. In sum, we possess a much finer tool than before to stimulate and to depress demand for transportation, and to do so at a lower cost due to the ability to pin-point incentives.

I agree and that is the beauty of fiscal policy – it can differentiate and target. It can contract some areas of the economy while expanding others. Monetary policy cannot do that and relies on questionable distributional assumptions anyway.

Conclusion

So far from macroeconomics being dead, the current downturn and its aftermath will reinforce the primacy of fiscal policy and the ineffectiveness of monetary policy.

The worrying thing is that, even as the top-end-of-town uses the fiscal injections to regain its position at the top of the stack, it is now mounting a relentless attack on fiscal policy for fear that the rest of us (particularly the most disadvantaged workers) will also gain some traction in the recovery.

An understanding of macroeconomics – as it applies to a fiat monetary system – has never been more important.

Now I am off to Almaty – to the Silk Road! More tomorrow – this time on Latvia unless my attention gets diverted.
